Meta: Criticism of retreat from independent fact-checking

Meta is dispensing with independent fact-checking and introducing so-called “Community Notes” on Facebook, Instagram and Threads. The company will also change some of the guidelines for content on its platforms. Experts warn of a surge in disinformation and hate speech.

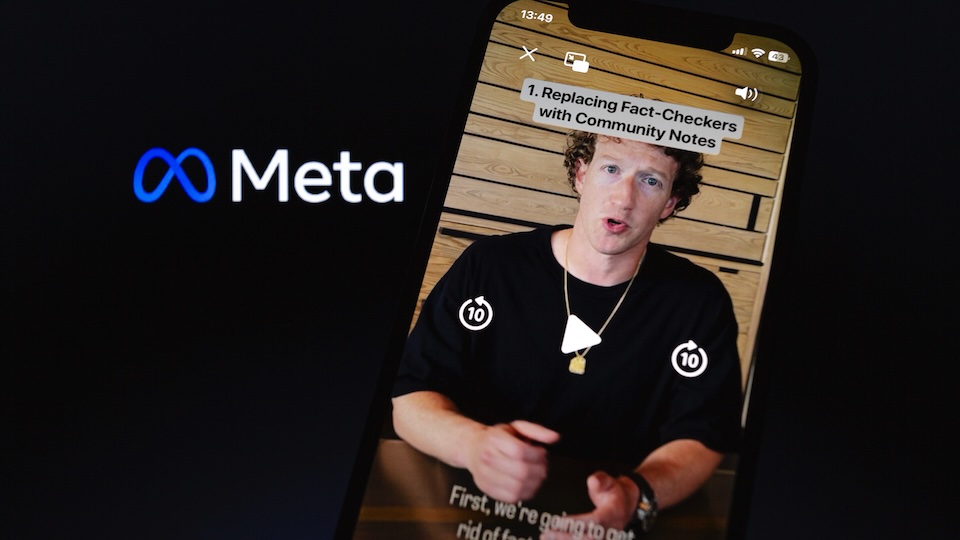

As Meta’s CEO Mark Zuckerberg announced on Tuesday, independent fact-checking by external partners will be replaced by a new feature called “Community Notes.” Users will be able to add context to “potentially misleading” posts. In making the change, Meta is following the example set by X, formerly known as Twitter. That platform shifted heavily toward its Community Notes feature after it was acquired by billionaire Elon Musk – who fired many of the company’s content moderators. X has been criticized for allowing more hate speech and right-wing extremist content since making the change.

The Community Notes feature will be introduced on Meta’s platforms in the coming months. Notes pointing users to additional information will be less visible than before. Until now, users had to click through a notice before they could view a post flagged by fact-checkers. In the future, posts will appear as normal and be accompanied by a separate notice indicating that additional information is available. Users will have to take the additional step of clicking on this notice in order to access the information.

Zuckerberg also announced that limits on content related to certain topics like immigration will be lifted in the coming weeks.

The company will also change how it enforces its rules: in the future, Meta’s automated moderation systems will only target “illegal and high-severity violations” – content relating to terrorism and drugs, for example. For “less severe policy violations,” the company will only take action after users have reported an issue.

Years-long partnership with fact-checkers

Meta introduced independent fact-checking in 2016. After the election of Donald Trump that year the company had come under pressure for its role in the spread of misinformation – and responded by partnering with well-regarded external fact-checking organizations. These included the Associated Press, which ended its partnership with Facebook a year ago.

According to an article in Wired magazine, Meta’s current US partners were not warned in advance of Meta’s decision – and first learned from Zuckerberg’s announcement video on Tuesday that their partnerships with the company would be ending.

US media outlets like the New York Times view the announcement as a “stark sign” of the company’s move to reposition itself in advance of Donald Trump’s second term as US president, which begins later this month. Though fact-checking organizations have explained repeatedly that they fact-check claims from all political camps, Republicans in the US have claimed that social networks censor “conservative voices.” After the announcement, Republican Senator Rand Paul of Kentucky said in a post on X that Meta “finally admits to censoring speech” and called the change in policy “a huge win for free speech.”

In the last week, Meta promoted Joel Kaplan – “the highest-ranking Meta executive closest to the Republican Party,” according to the New York Times – to a more prominent post and announced that Dana White, an ally of Donald Trump, would join its board. In his announcement on Tuesday Zuckerberg himself referred to the fact-checking on Meta’s platforms as “censorship.”

Neil Brown of the Poynter Institute sharply criticizes this charge. The institute founded the International Fact-Checking Network in 2015 and runs its own fact-checking outlet, PolitiFact, which counts Meta among its clients. Brown said of Zuckerberg’s statement, “It perpetuates a misunderstanding” of Meta’s own fact-checking program. “Facts are not censorship. Fact-checkers never censored anything.” Brown pointed out that Meta “sets its own tools and rules.” According to PolitiFact, the decision to remove content or not was always made by Facebook – not the external fact-checker.

Said Brown, “It’s time to quit invoking inflammatory and false language in describing the role of journalists and fact-checking.”

Criticism of the shift

The human rights organization Article 19 criticized Zuckerberg’s attempt to present the changes as a measure to protect freedom of expression. In reality, the organization said, the company has revealed “a troubling willingness” to subordinate itself to a political agenda. Article 19 fears negative consequences for user safety and criticizes Meta for placing corporate interest over respect for human rights.

German internet policy journalist Markus Beckedahl wrote that Zuckerberg was “kowtowing” to Republicans.

Warnings of more false information

Experts also warn of the consequences of the planned changes. Alex Mahadevan of the Poynter Institute told the New York Times that he expected the introduction of Community Notes to be a “spectacular failure.”

Valerie Wirtschafter of the Brookings Institution, a think tank, told the newspaper that a feature like Community Notes can be “one piece of the puzzle” – but it can’t be seen as the whole solution.

Imran Ahmed, CEO of the Center for Countering Digital Hate, charged Meta with “turbocharging” the spread of lies and hate.

The US consumer advocacy group Public Citizen characterized the end of fact-checking as “wrong and dangerous.” “Misinformation will flow more freely with this policy change,” said co-president Lisa Gilbert in a statement.

Nicole Gill of the organization Accountable Tech warned that dispensing with external fact-checking would lead to a “surge of hate, disinformation and conspiracy theories” on the platform – and could lead to real-world violence.

Only in the US, for now

Meta works with external fact-checkers outside the US as well. In Germany for example its partners include a subsidiary of the DPA news agency and the media company Correctiv.

In Europe these partnerships will apparently continue. Responding to a query from the news site Politico, Meta clarified that there were no plans to end its fact-checking practices in the EU. The company will review its obligations in the EU before making changes to its content moderation policy. (js)